A few months ago rOpenSci’s Scott Chamberlain asked me for feedback about a new package of his called crul, an HTTP client like httr, so basically something you use when, for example, writing a package that interfaces with an API. He told me that a great thing about crul was that it supports asynchronous requests. I felt utterly uncool because I had no idea what this meant, although I had already written quite a few API packages (for instance ropenaq, riem and opencage).

So I googled the concept, my mind was blown, and I decided that I’d trust Scott’s skills (spoiler: you can always do that) and just replace the httr dependency of ropenaq with crul. Why? First of all, note that Crul is a planet in Star Wars whose male inhabitants are called crolutes, which sounds quite cool (there are female ones as well, called gilliands, which doesn’t sound like the package name) and which I now use as a synonym for “user of the crul package”. But I had other reasons to switch… that was the subject of my lightning talk today at the French R conference in Anglet. In this blog post I’ll tell the story again, with a bit more detail, in the hope of making you curious about crul!

Pic by ThinkR, thanks Colin/Diane/Vincent!

Asynchronous requests for dummies like me

The OpenAQ API, which is a fantastic source of open data about air quality (see my blog post about ropenaq on rOpenSci’s blog), has a pagination system. If you want, say, all ozone measurements for one country, the API will produce several pages of results containing 10,000 measurements each, and for each of these pages you’ll need to make a request.

In theory, you can either do all these requests one after the other (synchronous requests, yes synchronous) or all of them at once or at least in bunches, which corresponds to asynchronous requests. The crul package supports asynchronous requests while httr doesn’t. As you can imagine, sending several requests at once can increase speed!

Of course, you can’t send too many requests at once to an API, either because it’s not allowed anyway, or at least because it’s not a really nice thing to do: you might put an API in trouble by asking too many things in one go and you probably don’t want to break things. I asked OpenAQ’s co-founder Joe Flasher whether I could use asynchronous requests, thinking he might say no. That was funny because I actually thought the API was frailer than it is: Joe told me it was fine to do up to 10 requests at once, so I started doing that.

A big change for ropenaq

So I started replacing all calls to httr functions with calls to crul, which wasn’t too hard really because crul has two vignettes, in particular one about asynchronous requests. I like the style of the vignettes; I could recognize the style from R for cats. Besides, Scott had asked for feedback and I assumed that asking many questions would just help him make the documentation fool-proof. Thanks for your patience Scott!

You can see the crul calls of ropenaq here. I have no ambition to write a step-by-step guide, but basically what happens now in ropenaq is:

- if the user provides a page argument, then I do a simple request for that page thanks to a crul::HttpRequest object. In the past, as a user of ropenaq, for any data wish you first had to make a query to get the count of measurements and then write a loop over all pages. Now you don’t, see the following point.

- if the user doesn’t provide a page, then I retrieve the total number of measurements for the query, then count the pages and prepare one query per page, which I group in bunches of 10 queries. Each bunch, which is a list, is then used to create an AsyncVaried object via crul::AsyncVaried$new(.list = async), and then I use methods of that object to retrieve the status and the content.
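The page counting and batching described above can be sketched in base R. This is only an illustration under stated assumptions, not the actual ropenaq code: the helper name batch_pages and its arguments are hypothetical, and the defaults simply reuse the 10,000 measurements per page and 10 requests per bunch mentioned in this post.

```r
# Hypothetical helper (not from ropenaq): given the total number of
# measurements for a query, work out how many pages there are and
# group the page numbers into bunches of at most `batch_size`.
batch_pages <- function(n_measurements, per_page = 10000, batch_size = 10) {
  n_pages <- ceiling(n_measurements / per_page)
  pages <- seq_len(n_pages)
  # split() groups page numbers by the index of the bunch they belong to
  split(pages, ceiling(pages / batch_size))
}

# Made-up count for illustration: 253,000 measurements means 26 pages
batches <- batch_pages(n_measurements = 253000)
length(batches)  # number of bunches
batches[[3]]     # page numbers in the last bunch
```

Each element of the resulting list is then the set of pages to request in one go.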

The only methods I needed to use were new to create objects, request to actually make the requests, status_code to retrieve the request status, get to extract the content of the request output when it had worked, and parse to, well, parse the contents. Their names are quite well chosen. To figure all of that out I read the vignettes and the documentation of the classes and, as mentioned previously, asked Scott questions. In particular, at the beginning I wasn’t too sure how to make the 10 requests for different pages at once, but as the async vignette of crul says,

“There are two interfaces to asynchronous requests in crul:

- Simple async: any number of URLs, all treated with the same curl options, headers, etc., and only one HTTP method type at a time.

- Varied request async: build any type of request and execute all asynchronously.”

and my case was the second one. I was also a bit puzzled as to how to pass my list of requests to the function creating the AsyncVaried object but it turned out you can just pass a list to the .list argument.
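Here is a minimal sketch of that varied-request pattern, assuming crul is installed. The URL is a placeholder rather than the real OpenAQ endpoint, and the function name fetch_batch and the page query parameter are made up for illustration, so this is not how ropenaq is actually written.

```r
# Placeholder URL (an assumption): swap in the real API endpoint.
base_url <- "https://api.example.org/v1/measurements"

# Hypothetical helper: request several pages at once and return the
# text bodies of the requests that succeeded.
fetch_batch <- function(pages, query = list()) {
  # One HttpRequest object per page; nothing is sent at this point.
  async <- lapply(pages, function(p) {
    crul::HttpRequest$new(url = base_url)$get(query = c(query, list(page = p)))
  })
  # A plain list of requests goes straight into the .list argument.
  res <- crul::AsyncVaried$new(.list = async)
  res$request()                      # send the whole bunch asynchronously
  ok <- res$status_code() == 200     # one status code per request
  res$parse(encoding = "UTF-8")[ok]  # bodies of the successful requests
}
```

Calling something like fetch_batch(1:10) would then send up to 10 page requests in one go, which matches the limit Joe was fine with.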

Now the CRAN version of ropenaq is a proud reverse dependency of crul (yes my packages have feelings), so if you do install.packages("ropenaq") you can take advantage of my hard work. In my talk I had the results of a small microbenchmark and, long story short, as you can imagine, the total time needed to retrieve data is shorter when you use asynchronous requests.

Other arguments in favour of crul

So asynchronous requests are a big argument in my opinion to use crul instead of httr as an API package developer but I’ve also listed a few other reasons. Note that I don’t get any money for saying any of this, this is my most honest opinion.

R6 classes!

You might have noticed I talked about creating objects and then using methods. crul is based on R6 classes so uses object-oriented programming. You can do things with these objects thanks to the methods. In httr, everything is supported by functions. This is an argument for using crul if:

- you like R6 classes or object-oriented programming in general.

- like me, you’ve not used a lot of object-oriented programming but you’re curious to discover it. It was really not scary. Besides, I love the fact that once you’ve created an object, in RStudio thanks to auto-completion you can start typing “$”, then press tab and see all the methods of that object.

Furthermore, each time I called an httr function in my package code I wrote httr:: before the name of the function to specify the import. With crul you do that only when creating an object; after that, when you use the methods of that object, you don’t need to declare the import any more. So now I can be a bit lazier!

The promise of mocking made easy quite soon!

The unit tests of an API package might fail because your code no longer works… or because the API changed its output or is just down. A solution to differentiate these failure reasons is to have tests calling the API, and tests using fake data, the latter being mocked tests. This is discussed in this very good thread. Right now crul supports mocking via webmockr, and if I understood correctly will soon support mocking in an easier way via vcr. When it does I’ll try to make myself wiser about mocking which I don’t use at all currently.

Conclusion

So overall, if you’re an API package developer you should consider giving crul a try! I’ll keep using httr in my webscraping and, well, it is still a dependency of all my other API packages, so I like both packages.

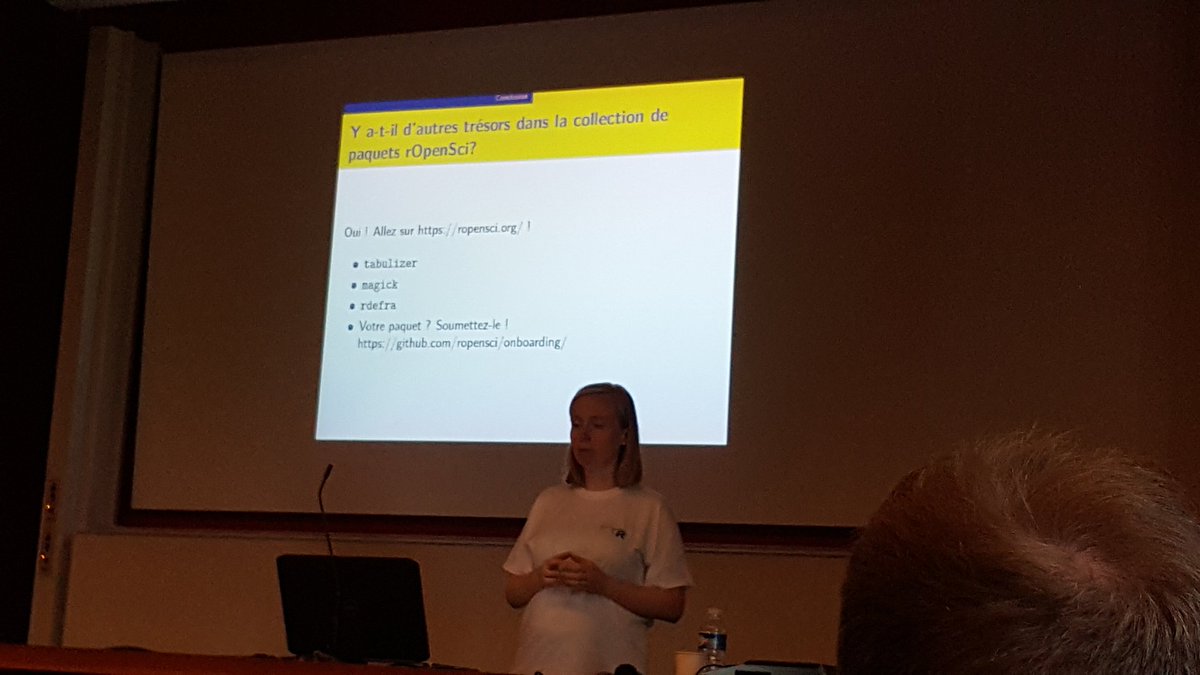

Pic by Aline Deschamps. On that slide I was merely advertising rOpenSci and in particular its onboarding process for packages.

At the French R conference I was happy to get to present the reasons why I became a crolute. I also enjoyed meeting new people: some of them I knew from Twitter (Colin Fay, who actually gave a talk about API packages; Diane Beldame, who led a tutorial about the tidyverse; Vincent Guyader, who made the use of Docker to encapsulate a Shiny app look really easy, all of them from ThinkR; Joël Gombin, who gave a keynote about data journalism; and Aline Deschamps from Dacta), and many others I didn’t know at all, for instance the real guy behind The R graph gallery, who made a cool project using tweets about surfing. I even got to see Nick Tierney again, who gave a talk about his awesome narnia package for handling missing data. The talks were great, and some findings were shared via the #angletr2017 hashtag on Twitter.

We heard that the next French R conference will be in Rennes, which is in Brittany, my beloved home region, so I’m already hoping I can go next year, as a human or a crolute or whatever!